Who is a drop-in replacement for whom?

From MySQL to Percona or MariaDB ...and back

As we all know, MariaDB started as a fork of MySQL and then slowly diverged until it became a different product.

Still, I often hear at conferences that MariaDB is a drop-in replacement for MySQL (https://en.wikipedia.org/wiki/Drop-in_replacement). For me this is a bold statement, given the definition of drop-in replacement: “It refers to the ability to replace one hardware (or software) component with another one without any other code or configuration changes being required and resulting in no negative impacts”. By implication, this also means I can go backwards.

In short, if MariaDB is a real drop-in replacement, we should be able to replace the MySQL binaries with the ones coming from MariaDB and then roll back without any issue.

This short article is the result of my well-known skepticism toward any kind of bold marketing statement.

To be clear, I am NOT going to compare the functionality of the different products; I just want to see whether I can replace one with the other.

The tests

What and how

For the tests I will use the latest version of:

- MySQL 5.7

- Percona Server 5.7

- MariaDB 10.3

And for the newest:

- MySQL 8.0.22 (8.0.23 is out, but the matching Percona Server release is not yet, so we cannot compare)

- Percona Server 8.0.22

- MariaDB 10.5

The instance will pick up its binaries through a symbolic link named /opt/mysql_templates/magic; I will then change the target of the link to point to the different binaries.
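The link swap itself can be sketched in a few lines of Python. This is only an illustration, not the script used for the tests: the function name `repoint_link`, the `.tmp` scratch name, and the directory names in the usage part are assumptions. It builds the new link under a temporary name and renames it over the old one, so the link path never disappears in between.

```python
import os


def repoint_link(link_path: str, new_target: str) -> None:
    """Make link_path a symlink to new_target, replacing any existing link."""
    tmp_path = link_path + ".tmp"  # hypothetical scratch name next to the link
    if os.path.lexists(tmp_path):
        os.remove(tmp_path)  # clear a leftover from an interrupted run
    os.symlink(new_target, tmp_path)
    # Renaming over the old link replaces it in a single step.
    os.replace(tmp_path, link_path)


if __name__ == "__main__":
    import tempfile

    # Stand-in directories; the real link would be /opt/mysql_templates/magic.
    with tempfile.TemporaryDirectory() as root:
        mysql_dir = os.path.join(root, "mysql-5.7")
        percona_dir = os.path.join(root, "percona-5.7")
        os.mkdir(mysql_dir)
        os.mkdir(percona_dir)
        link = os.path.join(root, "magic")

        repoint_link(link, mysql_dir)
        print("link ->", os.readlink(link))
        repoint_link(link, percona_dir)
        print("link ->", os.readlink(link))
```

The instance must be stopped before the link is changed and started again afterwards, as in the steps that follow.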

What I will do is simple:

- Point to MySQL

- Create a new instance

- Create the world schema and load data (using InnoDB)

- Select count(*) from world.City;

- SET GLOBAL innodb_fast_shutdown=0;

- Stop instance

- Point link to Percona

- Start instance

- Select count(*) from world.City;

- Drop world

- Repeat all steps from #3 (create the world schema), this time pointing the link to MariaDB

- Once the MariaDB test is done, point to MySQL and repeat.
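The repeated part of the procedure (from creating the world schema to dropping it) can be written down as a small plan generator. This is a sketch for readability only: `hop_steps` and `CHECK_QUERY` are names invented here, and the function only returns the ordered steps as text; it does not talk to a server.

```python
from typing import List

CHECK_QUERY = "SELECT COUNT(*) FROM world.City;"


def hop_steps(target: str) -> List[str]:
    """Return the ordered steps for one test hop to the given binaries."""
    return [
        "create the world schema and load data (InnoDB)",
        CHECK_QUERY,                           # baseline count before the swap
        "SET GLOBAL innodb_fast_shutdown=0;",  # request a full, slow shutdown
        "stop instance",
        f"point link to {target}",
        "start instance",
        CHECK_QUERY,                           # same count expected after the swap
        "DROP DATABASE world;",
    ]


if __name__ == "__main__":
    for target in ("Percona Server", "MariaDB", "MySQL"):
        print(f"--- hop to {target} ---")
        for number, step in enumerate(hop_steps(target), start=1):
            print(f"{number}. {step}")
```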

I will run the same tests for the 5.7/10.3 series and for the 8.0.22/10.5 series.

To qualify as a drop-in replacement, I expect to be able to move from MySQL to Percona Server to MariaDB and back to MySQL. Any deviation will prove we are NOT dealing with a drop-in replacement.
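The pass/fail criterion can be stated as code. The sketch below is one possible reading of it, with invented names (`Hop`, `is_drop_in`) and placeholder products "A" and "B": every hop must start cleanly with no configuration change and no system-table upgrade, and the chain must end where it began.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hop:
    source: str
    target: str
    started: bool         # instance came up and served the data normally
    config_changed: bool  # my.cnf had to be edited
    upgrade_needed: bool  # system tables had to be upgraded

    def is_clean(self) -> bool:
        return self.started and not (self.config_changed or self.upgrade_needed)


def is_drop_in(hops: List[Hop]) -> bool:
    """True only if every hop is clean and the chain returns to its origin."""
    if not hops:
        return False
    round_trip = hops[-1].target == hops[0].source
    return round_trip and all(hop.is_clean() for hop in hops)


if __name__ == "__main__":
    # Placeholder products, not measured results.
    there = Hop("A", "B", started=True, config_changed=False, upgrade_needed=False)
    back = Hop("B", "A", started=True, config_changed=False, upgrade_needed=False)
    print(is_drop_in([there, back]))
    print(is_drop_in([there]))  # no way back was tested
```

Requiring the return hop reflects the point made earlier: a replacement that cannot be rolled back does not meet the definition.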

Version 5.7/10.3

MySQL

Shift to Percona:

No errors at all in the log

Let us go backwards to MySQL

No issue at all

Let us try to move to MariaDB 10.3:

So the first attempt failed, and I had to modify my.cnf, removing the GTID configuration as well as the Performance Schema settings (see the Reference section about that).

Once done:

It works, but I needed to run mysql_upgrade. Once that was done, I had a lot of errors related to the Performance Schema, and still some InnoDB issues:

Let us go back to MySQL now:

Try to run mysql_upgrade

The only way to access the data at this point is to use skip-grant-tables. But that is unsustainable, and in any case I continue to get errors in the log.

Summarizing

Once I have an instance built with MySQL 5.7, I can easily shift to Percona Server 5.7 and go back again. I can migrate to MariaDB, but this requires configuration changes, and I must run mysql_upgrade to modify the core system tables. Finally, I cannot roll back to MySQL: the modifications made by MariaDB do not allow it.

Version 8/10.5

MySQL

Move to Percona Server

No errors of any type and no changes, as for 5.7

Let us rollback to MySQL

Perfect and no issue.

Let us now try MariaDB

OK, let us see what happens if I remove the optimizer settings:

There is no way to get it working short of a logical dump

Summarizing

As with MySQL 5.7, once I have the instance built with MySQL 8.0.22 I can move to Percona Server 8.0.22 and roll back with no issues. Moving to MariaDB is not possible at all without a full LOGICAL dump, and I would really like to see how long that takes when you have terabytes of data.

Another weirdo

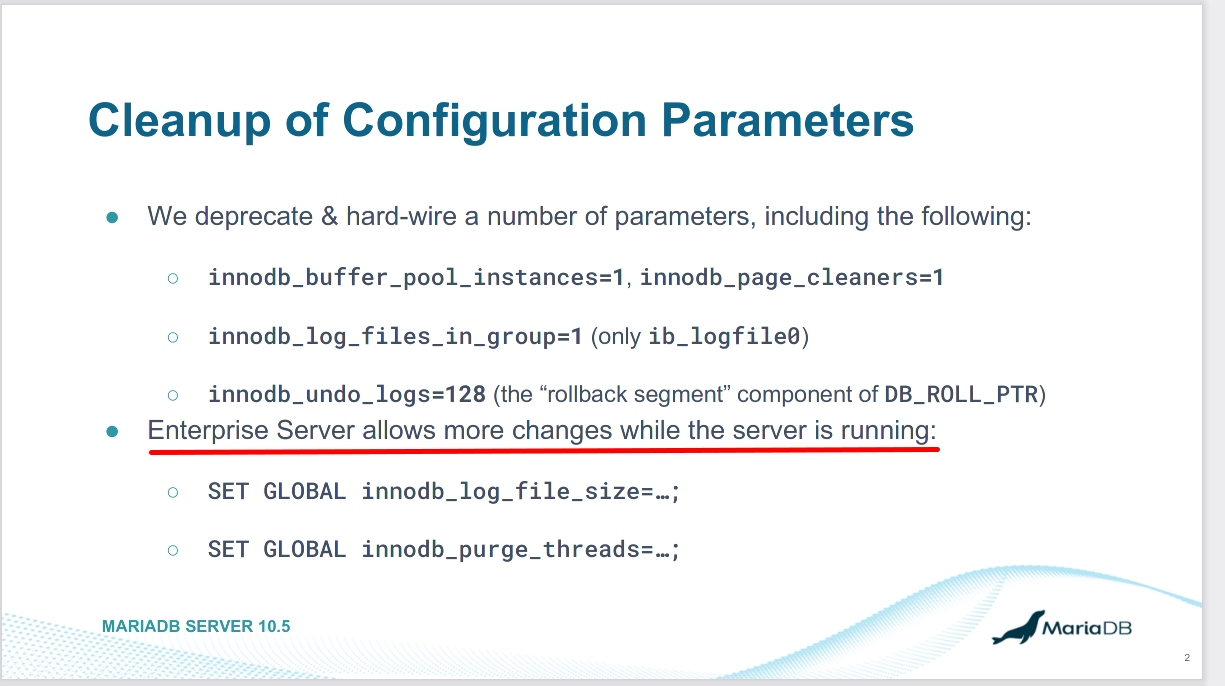

While I was working with MariaDB I also reviewed the documentation, and my eye was caught by this (https://mariadb.com/docs/reference/es/system-variables/innodb_purge_threads/)

“WoW”, I thought, “DYNAMIC settings for innodb_purge_threads this is cool”. Let me try it.

Whaaat??

Then I realized I was on the “Enterprise Documentation” page. Checking the community version (https://mariadb.com/kb/en/innodb-system-variables/#innodb_purge_threads), innodb_purge_threads is still NOT dynamic, and after checking further I found the same story for innodb_log_file_size.

I was very unfavorably impressed by this, for several reasons, but I want to mention the two most important:

- MariaDB declares over and over that it is the one truly open source and community-oriented product. But where is the support for the community here?

- Is InnoDB not owned by Oracle? Isn't MariaDB using it because Oracle released it under the GPLv2? So why are they modifying the code and not returning it to the open source community? I am not an expert in legal matters, but that sounds to me like an infringement of the license.

Conclusions

Drop-in replacement has a very specific meaning, and the term must be used with caution. It also comes with several strings attached, one of which is that replacing binaries must not be a one-way operation. Attaching the word “limited” to drop-in, as the MariaDB documentation does, is not correct: it still evokes an inapplicable concept and can be seen as misleading advertising.

As we can see, the only real drop-in replacement for MySQL is Percona Server. MariaDB is not even close: it requires too many changes in the configuration file, and of course needing a logical dump is not even remotely the right way to go.

In short, MariaDB is obviously a different product, as many have already stated in different articles, and it is diverging more and more.

The only reason MariaDB continues to play the drop-in game with MySQL, in my view, is that it keeps using the traction MySQL has in the community and the market to attract customers. They also draw on the work done by Oracle and Percona but, as shown with innodb_purge_threads/innodb_log_file_size, they do not hesitate to keep significant features for the Enterprise version only, without sharing them with the community.

As I have said many times, MariaDB has great minds in development, from Monty down to every level of developer/DBA. We must recognize and respect that, just as we must recognize the great work they do at the technical level.

I would love to see more collaboration, but I also understand the need to be something different to survive as a company.

What I cannot accept are claims that are not true, like the drop-in replacement one (even when qualified as limited), or, even worse, the case of innodb_purge_threads/innodb_log_file_size.

That is wrong, and as a strong advocate of open source and a lover of the MySQL/MariaDB community I feel I need to voice my concern.

Great MySQL to all

Reference

https://www.percona.com/resources/webinars/differences-between-mariadb%C2%AE-and-mysql%C2%AE

https://mariadb.com/docs/reference/es/system-variables/innodb_purge_threads/

https://mariadb.com/kb/en/innodb-system-variables/#innodb_purge_threads